I've been trying to get raw temperature data from the BOM website. Remember, this is data whose collection we as taxpayers have financed over the years: from thermometers behind old post offices in one-horse towns in the 19th century, all the way to modern computerised weather stations. But we paid for it, just as we pay the salaries of the alarmists who populate what is laughingly called a bureau of "meteorology".

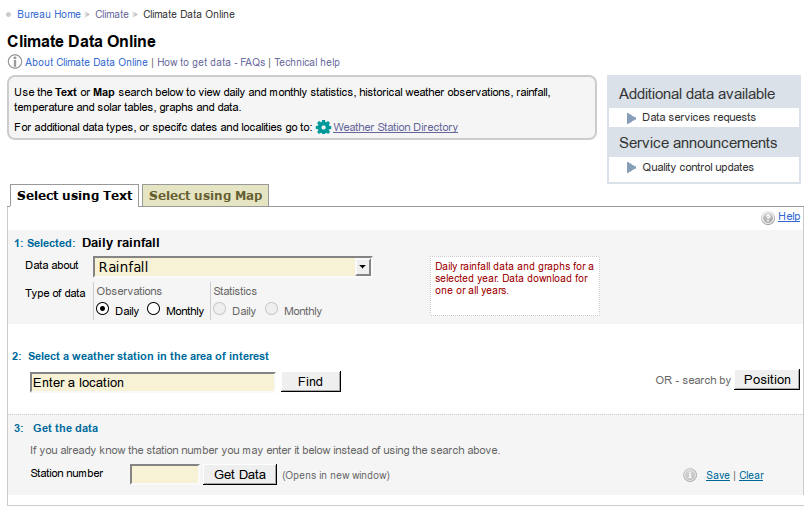

So let's see what the site looks like:

Look at "1: Selected": that always comes up as "Daily rainfall" and has to be reselected for max or min temperatures (the two have to be downloaded separately). Then you get to enter a location, and click "Find":

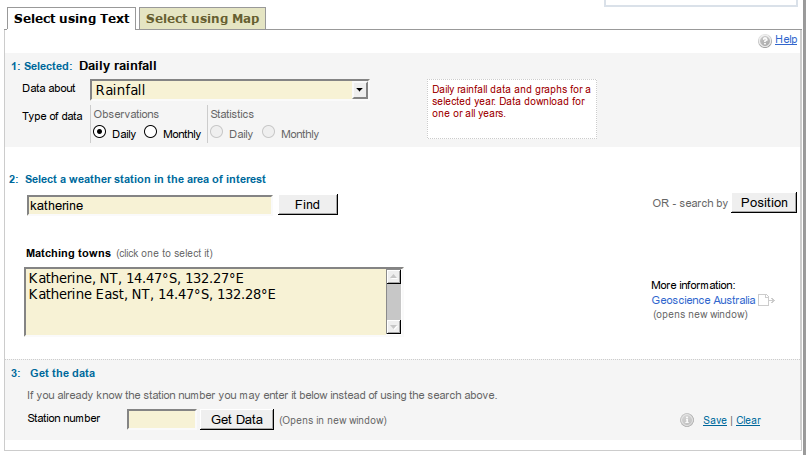

Now we get to select the town. Let's try Katherine East:

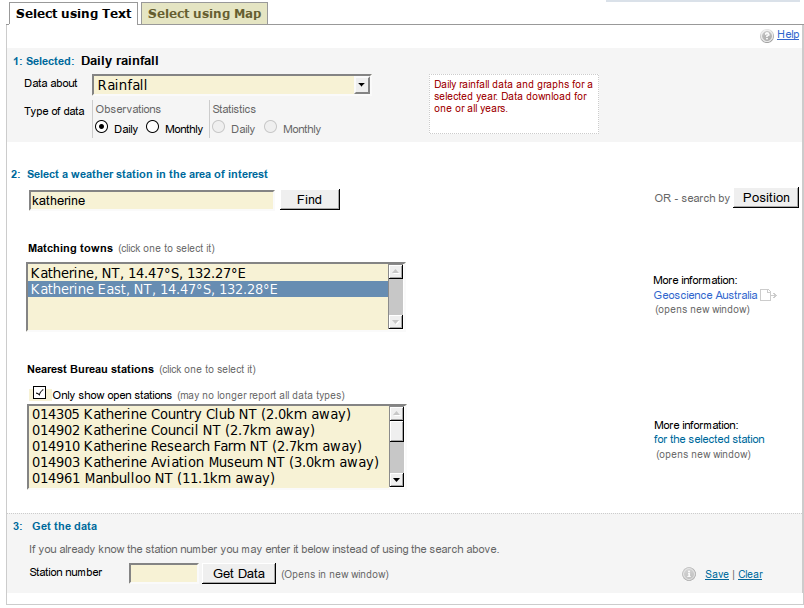

And since we want historical data (so we can do a better job than BOM and go back past 1910 - or even just to check BOM's post-1910 work on the older stations), we have to deselect that tick in "only show open stations". Yet another click. But let's select Katherine Council NT:

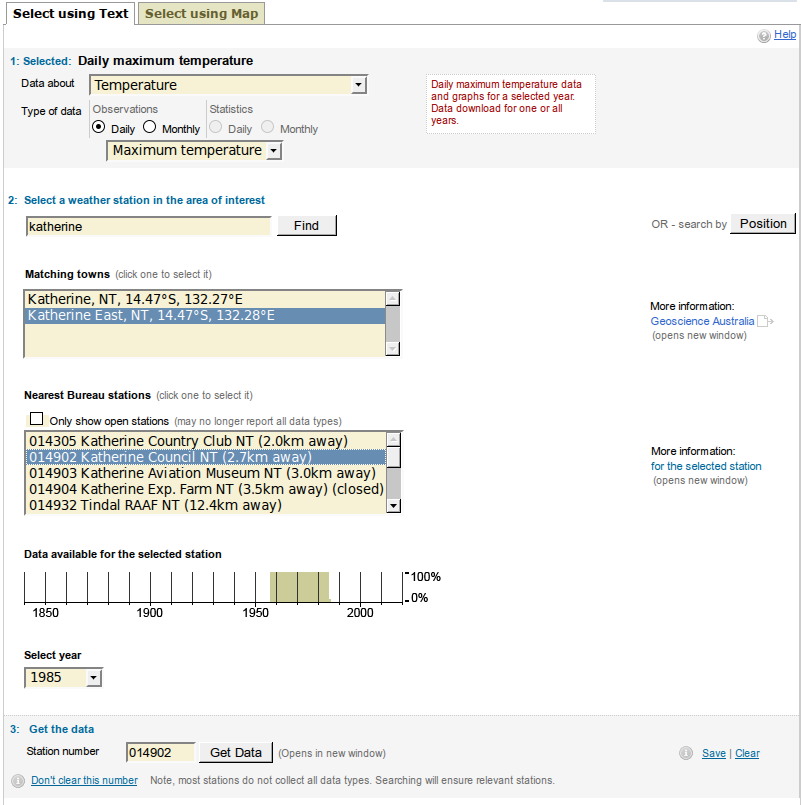

Now we can see the available data range, the station number is filled in, and we can "Get Data":

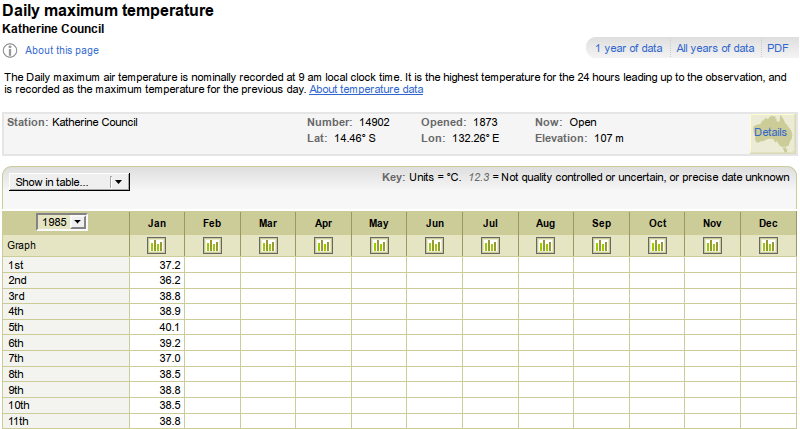

I am only showing the top of the page - it rolls on for a while - because we are interested in that "All years of data" link in the top right corner. That, at long last, is the link to the actual data file that we need to do any sort of analysis on the BOM's figures. Let's hover over it and see what it says:

http://www.bom.gov.au/jsp/ncc/cdio/weatherData/av?p_display_type=dailyZippedDataFile&p_stn_num=014902&p_c=-44463075&p_nccObsCode=122&p_startYear=1985

If we click on it, sure enough, we get a download link that gives us a zip file containing two files: some text notes, and a ".csv" file that can be read into a spreadsheet or processed with our own code. But how much of that URL is really necessary? We can paste it into our browser manually and delete bits. Sure enough, it needs the bit that says:

p_display_type=dailyZippedDataFile

because otherwise it wouldn't know the format we want. It needs the bit that says:

p_stn_num=014902

because otherwise it wouldn't know which station we want. But surprisingly, it ignores the parameter that says:

p_startYear=1985

You can delete that one without harm. In fact, a closer look shows that it is wrong anyway. This station opened in 1957 and has no data after 1985, so 1985 is where its record ends, not where it starts. And that might make us wonder why it is described as open, but that's the least of our worries. Continuing, it also needs the parameter:

p_nccObsCode=122

because that one tells it which data set we want - in this case, maximum temperatures. But now the really interesting one. Before we look at it, let's focus on one very important fact about our investigation above: except for the startYear parameter, which isn't needed, all the others are predictable: the type of data file, the station number, and the observational dataset we want, are all predictable and programmable.
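To make the dissection above concrete, here is a minimal sketch using only Python's standard `urllib.parse`. It splits the link into its parameters and rebuilds it without `p_startYear`. The helper names `split_params` and `drop_param` are made up for illustration, and nothing here contacts the BOM site; whether the server accepts the trimmed link is something I only checked by hand in the browser.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# The "All years of data" link exactly as it appears when hovering over it.
URL = ("http://www.bom.gov.au/jsp/ncc/cdio/weatherData/av"
       "?p_display_type=dailyZippedDataFile&p_stn_num=014902"
       "&p_c=-44463075&p_nccObsCode=122&p_startYear=1985")


def split_params(url):
    """Return the query parameters of a URL as a dict of strings."""
    return dict(parse_qsl(urlsplit(url).query))


def drop_param(url, name):
    """Rebuild the URL without the named query parameter."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k != name]
    return urlunsplit(parts._replace(query=urlencode(kept)))


params = split_params(URL)
trimmed = drop_param(URL, "p_startYear")

for key, value in params.items():
    print(f"{key} = {value}")
print(trimmed)
```

Four of the five values are things we choose ourselves; only `p_c` has to be copied from the page.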

We could write a program to capture the entire temperature record for Australia in one easy step. It's all data we as taxpayers paid for, and we have every right to have it.

We could, that is, except for the one and only extra parameter, the bit that says:

p_c=-44463075

This is the only parameter with a meaningless name. Its contents bear no relation to the data being requested, which is completely specified by the other parameters. Despite looking at many examples for different requests, no pattern is apparent in this number.

Now there are two possibilities for why this field even exists. One is that the BOM are incompetent. I already had reason to believe this, so I assumed this was the reason for the code; I didn't think any deeper at first. Presumably BOM's programmers had some extra requirement for a database index or something, and rather than calculate it properly, they just passed it around in the HTTP request. It's rather sad that our BOM is populated by nincompoops, but if this is the reason for this field, at least it is honest.

But then, some days later, I noticed that all the funny numbers had changed; a request that worked earlier no longer did so, and the funny number had to be recalculated.

This would appear to rule out my first assumptions, and it brings us to the second possibility for the existence of this field: it is there to guarantee that requests for data have to be made by manually clicking through all the options I have shown above, and to ensure that no automated program can download this public data, bought and paid for by taxpayers.

If the second possibility is the real one, BOM would appear to hope that by being devious, their manipulations of this data in the temperature record cannot be checked. If this is the real reason, then BOM have devoted extra resources, at extra cost to the taxpayer, to developing an obstruction against us, the taxpayers, getting climate data that we have every reason to see. In view of the many strictures being placed upon us in the name of preventing "global warming", we have every moral right in the world to see and analyse it for ourselves.

This is especially true given that BOM, instead of remaining a disinterested meteorological service, has become an active player in the "climate change" scare.

Frankly, it's a scandal.

Re: Australian Bureau of Meteorology (BOM) obstructing ...

I never thought that these things worked like this. And there is the loophole which helps to create the scandals.

Re: Australian Bureau of Meteorology (BOM) obstructing ...

I'm not sure; it looks more to me like some form of 'security' to avoid cross-site requests by having a unique 'token' in the requests.

Regardless, there's an easier way to do it:

http://www.bom.gov.au/climate/data/stations/

Search for the station you want, and you can pull a list of all stations within up to 250km of the selected station, with links in a table to directly download the data. (Note that for some types of data it will say 'available at a cost', but for at least the daily mins and maxes it should be free.)

If you want to go one step further, you can simply enter the search directly in your browser:

http://www.bom.gov.au/jsp/ncc/cdio/weatherStationDirectory/d?p_state=&p_...

This will return a list of stations and their codes, and in the last column is the code that you need for the p_c parameter. From this, it should be possible to automate the downloads.
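As a rough sketch of what that automation might look like, assuming you have already copied a station number and its matching p_c code out of that station list (I haven't tried to parse the list itself here): the function names are my own, the UTF-8 encoding of the CSV is a guess, and 122 is the maximum-temperature code from the original post. Only the standard library is used.

```python
import csv
import io
import zipfile
from urllib.parse import urlencode
from urllib.request import urlopen

BASE = "http://www.bom.gov.au/jsp/ncc/cdio/weatherData/av"


def build_url(station, p_c, obs_code=122):
    """Assemble the zip download link from its four required parameters."""
    query = urlencode({
        "p_display_type": "dailyZippedDataFile",
        "p_stn_num": station,    # keep as a string so leading zeros survive
        "p_c": p_c,              # taken from the station list, not computed
        "p_nccObsCode": obs_code,
    })
    return f"{BASE}?{query}"


def read_csv_from_zip(zip_bytes):
    """Return the rows of the first .csv member, skipping the text notes."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        name = next(n for n in archive.namelist()
                    if n.lower().endswith(".csv"))
        with archive.open(name) as member:
            # Assumption: the file decodes as UTF-8.
            text = io.TextIOWrapper(member, encoding="utf-8", newline="")
            return list(csv.reader(text))


def fetch_station(station, p_c, obs_code=122):
    """Download one station's zip and return its CSV rows (needs network)."""
    with urlopen(build_url(station, p_c, obs_code)) as response:
        return read_csv_from_zip(response.read())
```

Calling `fetch_station("014902", "-44463075")` would request the Katherine Council file from the first post, but since the p_c values apparently change over time, that particular code may no longer work and would have to be looked up again.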